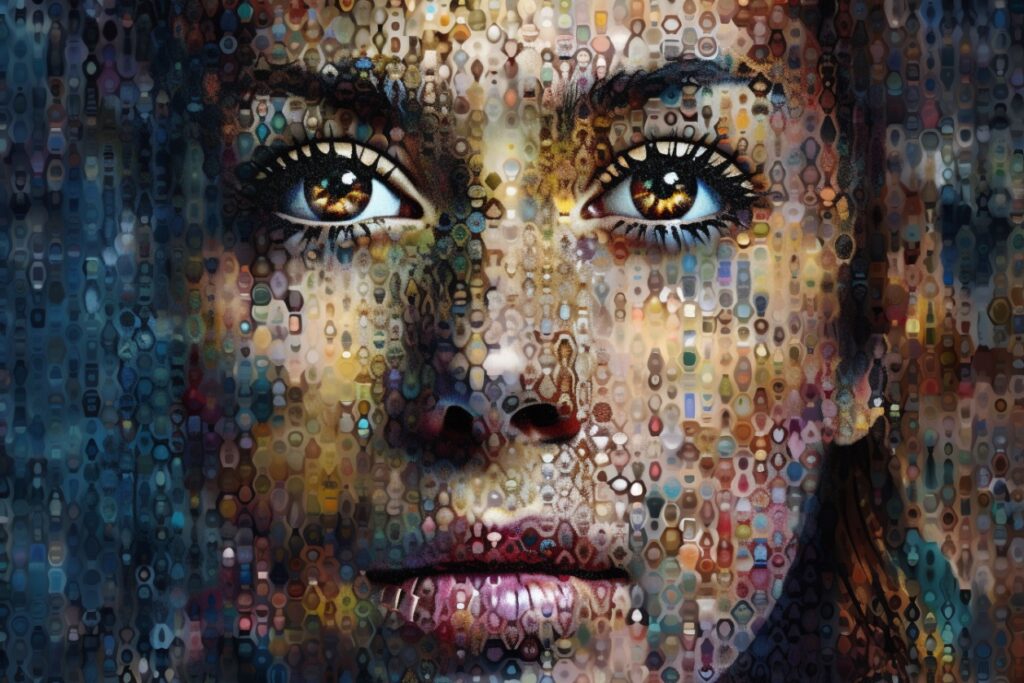

In this series of articles, I explore the fascinating realm of Generative AI models, seen as concentrated intelligence, and their profound impact on our society.

By tapping into the vast collective mind, digitization has enabled us to access the accumulated knowledge of humanity since the invention of writing.

Join me as we explore this intriguing topic in greater detail and uncover the exciting possibilities it presents.

A Glimpse of the Future

In 2060, David dreams of becoming the best defense attorney in the country. After losing his best friend under heartbreaking circumstances, he vowed to prevent any woman from enduring domestic violence under his watch. He is a fourth-year student, and today he is taking his most important exam of the year.

There is only one supervisor in a room of 52 students. The senior shepherd devours her blue book, while the school’s AI monitor scrutinizes the candidates.

David looks very confident. He is good at case-solving patterns, and his excellent visual memory gives him a solid toolbox of cases and amendments. Deep inside, however, he is stressed about his average skills in evidence analysis and forensic correlation. For the exam, he is allowed to use the Internet, the LegalGPT AI model, and the online state court database.

David articulates his dossier like a virtuoso. He starts by laying out the evidence in brief sentences. He then links each piece of evidence to references and precedents from previous cases and legal decisions. Shortly after, the legal argument is a dense one-pager. In next to no time, using LegalGPT, he generates his entire lawsuit, a symphony of 27 pages written in perfect legal language. Finally, he makes a few adjustments, then generates a new batch of updates.

And voilà.

Satisfaction and relief radiate from his face as he submits his copy. He stands up, packs his things, and pauses when the supervisor catches his attention. She looks at him and says:

“Forty years ago, I had to write those 27 pages. Clearly, it is the end of an era.”

Dorine UWATIMINA, law professor (retired), grand supervisor.

Beginning the Era of Augmentation

The launch of the GPT-3 API in 2021 marked the beginning of a new era: the age of individual augmentation as a service. We are now living in an era of thought materialization, in which one can manifest one’s desires simply by articulating them. Ideas are designed, illustrated, musically composed, rendered in 3D, explained, or revealed by the AI.

Companies like Google (BERT), OpenAI (GPT-4), and Meta (LLaMA) are revolutionizing the domain of deep learning. Their models mark a significant advancement in natural language processing: Large Language Models (LLMs) are taking center stage worldwide.

This means we are experiencing the transition from “programming” to “narrating”.

It is a paradigm shift in which artificial intelligence dramatically simplifies and amplifies roughly three quarters of the corporate work that relies on Information Technology, such as development, user interface design, illustration, workflow, or reporting.

Generative AI is the digitized embodiment of our collective knowledge and expertise.

As a consequence, we are beginning a mass upgrade of cognitive work that can be converted into algorithms and crystallized by pure logic. This upgrade leverages the most popular high-level programming languages of all: human languages.

From now on, spoken languages translate directly into machine language, much as Google Translate turns one language into another, except that the translator is ChatGPT.

As programming gets easier, the way you think as an engineer matters more than your coding skills.

The burning question

I hear your question: Am I going to lose my job?

The full answer will come later in this series. Long story short: it depends on your ability to adapt by learning a practice that is new for everyone.

Unlike any other disruptive technology, it has changed the rules of the game forever: people using AI are going to replace you.

And who are these people using and building AI? The adventurous, the curious, the experimenters, the techies, the entrepreneurs, the hustlers, the bad guys, and the future AI natives, our kids.

Homo Sapiens Sapiens vs Homo Auctus

Science is offering you a choice. For your own benefit, I am asking you to take the leap, understand what it is like to work with a digital copilot, and forge your own opinion.

Should you take the red pill of adaptation, I recommend the following:

- Start by trying ChatGPT or Bing Chat at least once. Bing Chat is built on a GPT model and renews the search experience, taking googling to a whole new level.

- Get acquainted with a Generative AI that is useful in your industry. For example, Midjourney can generate images for email marketing.

- Discover how you can be productive with this technology. It is not a silver bullet, but you can instantly acquire an arsenal of skills.

- Build new habits until the tools feel familiar, connect the dots, and keep improving your work until you become far more productive.

- Think about how someone using AI tools could replace you, then be that person: replace yourself with the new you, your augmented version.

Or simply ignore all of it, swallow the blue pill of comfort, and undergo the first “Great Upgrade”.

Eat your own dog food

I have been experimenting with OpenAI technologies since 2020 and have used Google Dialogflow since 2018. With it, I released my first chatbot, which answered regulatory questions about GDPR and PSD2. Developing with Natural Language Processing (NLP) was an eye-opener. I concluded that chat provides the ultimate user experience for interacting with machines. It all sounds so obvious now, yet it was not back then, despite all the buzz around Siri, Google, and Alexa.

Since GPT-3 came out, I have made a point of working with AI augmentation in my experiments. As far as hard skills are concerned, the conclusion is daunting: Generative AI can do most of what I know and what I am mentally capable of. I can safely say it outperforms me in some areas.

In addition, AI is simply miles ahead in depth of knowledge. It also has far better linguistic skills than mine when it comes to articulating ideas in languages other than French and English.

Yet the biggest surprise is its ability to take a simple idea and make it grow by putting words in concert. AI feels like the genius child of Humanity.

Words change the world

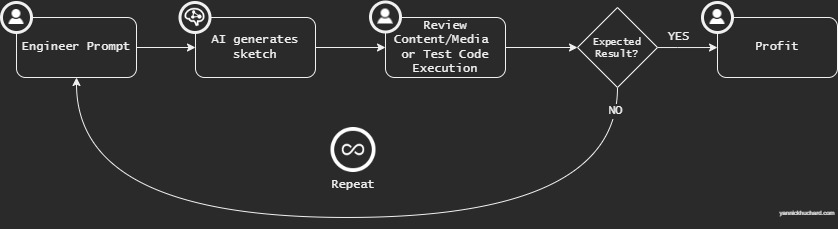

Generative AI comes with a new discipline: prompt engineering. It consists of finding the right text and the right qualifiers to describe the desired output as closely as possible to what you imagined.

For example, this prompt in Midjourney:

Prime Minister Xavier Bettel playing the finals of League of Legends world eSport championship at the Olympic games streaming on Twitch

generates the following picture:

Ultimately, prompt engineering uses natural language as a modeling interface to command the “commandable world”. The more smart systems and devices there are, the more words animate the world!

The widespread adoption of innovative applications based on Generative AI marks the end of the road for this generation and the beginning of a new breed of workers and creators.

Another finding, however, is that we still need a “general assembly semantic”: a layer that would choreograph a fuzzy set of ideas and accurately animate the world from a single well-written thought.

The assembly process, which can be summarized as the loop “decomposition-planning-action-correction”, will likely open the door to Artificial General Intelligence (AGI). Coupled with widespread natural language programming interfaces (NPI), this is the real endgame. In that regard, we are already observing interesting experiments such as AutoGPT that look like sparks of AGI.
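To make the “decomposition-planning-action-correction” loop a little more concrete, here is a minimal Python sketch. It is not how AutoGPT works internally; `decompose`, `plan`, `run_loop`, and the `act`/`check` callbacks are hypothetical names, and a real agent would delegate decomposition, action, and checking to a language model rather than to the toy string logic used here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    task: str
    done: bool = False
    attempts: int = 0


def decompose(goal: str) -> List[str]:
    # Decomposition (hypothetical): split a goal written as "a; b; c" into sub-tasks.
    # A real agent would ask a language model to break the goal down.
    return [part.strip() for part in goal.split(";") if part.strip()]


def plan(tasks: List[str]) -> List[Step]:
    # Planning: here we simply keep the order produced by the decomposition.
    return [Step(task) for task in tasks]


def run_loop(
    goal: str,
    act: Callable[[str, int], str],
    check: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> List[Step]:
    steps = plan(decompose(goal))
    for step in steps:
        # Action + correction: retry a step until its result passes the check
        # or the attempt budget is exhausted.
        while not step.done and step.attempts < max_attempts:
            step.attempts += 1
            result = act(step.task, step.attempts)
            step.done = check(step.task, result)
    return steps


# Usage: a toy "action" that labels each attempt, and a check that rejects the first draft.
def act(task: str, attempt: int) -> str:
    return f"{task} v{attempt}"


def check(task: str, result: str) -> bool:
    return not (task == "draft" and result.endswith("v1"))


for step in run_loop("outline; draft; review", act, check):
    print(step.task, step.attempts, step.done)
# prints:
# outline 1 True
# draft 2 True
# review 1 True
```

The `max_attempts` cap is a deliberate choice in this sketch: without it, a correction step whose result never passes its check would loop forever.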

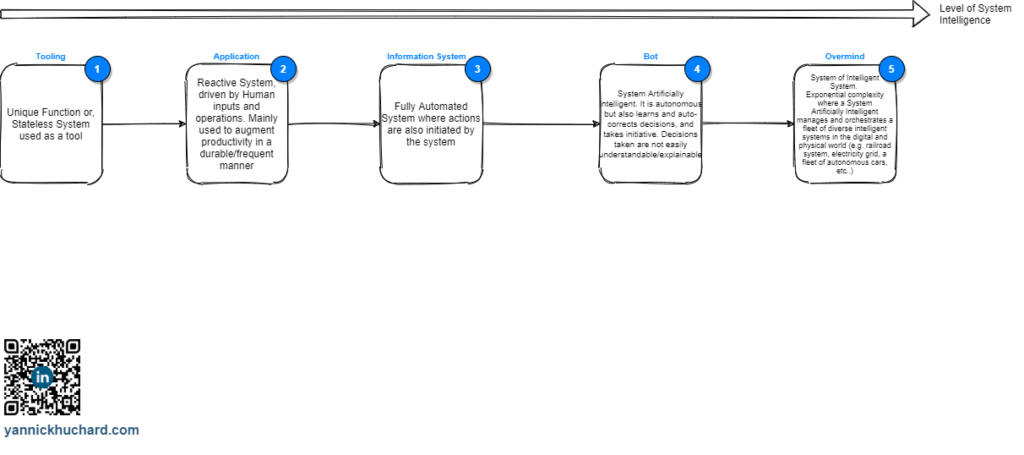

Transitioning from the Digital Transformation to Digital Augmentation

Picture this familiar situation.

Your maturity in terms of digital adoption is high. You are developing a culture of digital awareness, offering mobile-first customer interaction, and your brand is fighting for its visibility on social media. You have the feeling of doing great.

Congratulations.

Yet the market atmosphere is heavy. You feel the pressure as every week goes by. The competition is fierce, and you have been looking for an army of IT engineers and data analysts for the last six months. Furthermore, customers are getting pickier because the offering is abundant. Your analytics tell you a client can switch in the blink of an eye if your experience does not meet their rising standards. Then, just when you thought you had nailed it with your latest Instagram reel, it receives negative feedback. Even worse, there is a relentless wave of new products mimicking yours. These startups and VCs are constantly trying to uncover the mythical unicorn while pushing your visibility back to Google’s page 2. And you feel that the moment your industry gets shackled, disrupted, or crippled could come at any time.

Who would have thought even Google’s dominance would be threatened?

Fortunately, there is a nascent vision. Transformation is not enough anymore. If you cannot obtain more skilled people now, why not acquire more skills for your people now?

AI is the key to unleashing your talents.

Slowly, Augmented Work is emerging as the evolution of work as we know it, characterized by these two elements:

- A human is the sole team leader of their digital workers: applications, automata, and specialized A.I. models covering numerous parts of the job, such as programming, translation, video editing, illustration, design, and planning.

- Teams as we know them will obviously still exist, but they will also be augmented by AI at the team level. The team can exist as an independent entity, either within the company AI or as a dedicated team companion if you need explicit segregation of duties. “Team spirit” takes on a whole new meaning with AI.

The flow of work evolves toward:

A. The human writes instructions, using prompt engineering to express explicit requirements as commands. The prompt is in fact an evolution of the Command Line Interface (CLI), with a far more general purpose.

B. The AI generates a first draft.

C. The human amends the draft with their input, then refines the details with new commands.

D. Once the AI-driven engineering cycles produce something good enough to release into the real world, you ship it for user acceptance testing, or straight to production if the risk is low.

- Interaction with the AI becomes conversational, by chat or by voice. AI is your new colleague.

- AI starts having digital bodies in the form of familiar avatars, and will be present in multiple places: in your phone, in your mixed reality glasses, in your Metaverse. Avatars could be Non-Player Characters (NPCs), digitized versions of yourself, or even the retired expert who used to be your mentor.
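The A to D flow of augmented work can be sketched as a small loop. This is an illustration under stated assumptions: `ai_generate` is a hypothetical stand-in for any Generative AI call (it simply echoes the prompt), and the “good enough” test and the risk flag are supplied by the human, as in steps C and D.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Draft:
    text: str
    revision: int


def ai_generate(prompt: str, previous: Optional[Draft] = None) -> Draft:
    # Hypothetical stand-in for a Generative AI call: it only echoes the prompt.
    if previous is None:
        return Draft(text=f"[draft] {prompt}", revision=1)
    return Draft(text=f"{previous.text} + {prompt}", revision=previous.revision + 1)


def augmented_workflow(
    instruction: str,
    amendments: List[str],
    is_good_enough: Callable[[Draft], bool],
    risk_is_low: bool,
) -> str:
    # A + B: the human writes the instruction, the AI produces a first draft.
    draft = ai_generate(instruction)
    # C: the human amends the draft with new commands until it is good enough.
    for amendment in amendments:
        if is_good_enough(draft):
            break
        draft = ai_generate(amendment, draft)
    if not is_good_enough(draft):
        return "keep iterating"
    # D: ship straight to production only when the risk is low.
    target = "production" if risk_is_low else "user acceptance testing"
    return f"ship revision {draft.revision} to {target}"


print(augmented_workflow(
    "landing page for a bakery",
    ["add a contact form", "use a warm color palette"],
    is_good_enough=lambda d: d.revision >= 3,
    risk_is_low=False,
))
# prints: ship revision 3 to user acceptance testing
```

The point of the sketch is where the human sits: the AI only ever produces drafts, while the decision to keep amending and the choice of release target stay with the person in charge.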

So, am I going to be replaced by Artificial Intelligence?

You vs AI: you (still) have the upper hand

Here is a bet: 80% of white-collar workers will keep their jobs. The other 20% will refuse to learn these new tools, either out of fear of overwhelming technological advancement or out of conviction. Eventually, this minority will rush toward retirement and use these AI-powered services anyway, buying recommended products on Amazon after being guided there by Google Bard in Google Search.

Why do I think that way? Because if we can produce much more with the same number of people, why would we deliver the same amount of products with fewer people?

Let’s take the example of Apple. The company entered the AI game in 2017 by introducing Core ML, an on-device machine learning framework embedded in iOS. The same year, it released the first-generation Apple Neural Engine (ANE) in the iPhone X’s A11 Bionic chip.

Apple’s immeasurable impact comes from its ability to create and materialize ideas at the intersection of beauty, function, storytelling, and branding. Do you think Apple will push its culture of product excellence with the same number of people amplified by a myriad of AI models, or will the company prefer reducing its workforce by leveraging more AI?

Pause for a second and think about it.

The other side of the coin

Taking the employer perspective in the era of AI Augmentation: what constitutes the difference between you and another candidate?

Any individual with a team of AI has the upper hand, as he or she will be digitally augmented with skills and experience that usually take years to acquire. What remains to be developed is the skill of mastering these new abilities and using them to their fullest, like an orchestra conductor.

You become the manager of AI teammates.

Hence, from the employer’s perspective, hiring someone means hiring a virtual team rather than an individual.

This raises the responsibility of managers and the Human Resources department in the whole equation. Colleagues need to be upskilled to stay ahead, not only for the sake of the company but also to keep building their own value on the market. Thus, leaders and HR have to set things in motion by organizing the next steps, even while their own jobs are being reshaped and augmented…

Unlock the Future of Office Jobs Now

First, let’s admit once and for all that you cannot win a one-on-one battle against AI, just as you cannot win a nailing contest against a hammer.

The battle is long lost.

The battle doesn’t even make sense.

Because AI is the cumulative result of humanity’s knowledge, born from successful and failed experiments. To put it another way, as a sole individual, you cannot win against all of us and our ancestors combined!

And competing with AI is the wrong mindset anyway.

Instead, build the future, your future, with all of us and our ancestors combined! You only need to accept that the future will be vastly different, and to be part of the solution rather than engineering your own problems.

AI is here to stay.

The questions to ask from now on are:

- Are we all going to benefit from it?

- What portion of handcrafting do we want to keep?

- How much evil is going to benefit from it?

- How long until we get robots as widespread as vacuum cleaners?

- When are we going to find truly sustainable and clean energy? (no, batteries are not sustainable)

The key is here and now: you need to invest in algorithmic and analytical skills so you can translate activities into algorithms and make your work augmentable.

The winning companies and communities will be those that combine their people’s intelligence and AI-augmented creativity with the physical resources to change the world and the ability to satisfy needs through an enjoyable experience, while maintaining a transparent and engaging conversation.

The gap between “good” and “best” businesses will shrink even further, but the experience you offer and your branding will have a tremendous impact. Consistency and coherence in how you serve customers and engage with fans will then act like compound interest. This is how you win the perpetual game.

The term “community” takes on a new meaning given how freely available AI is. You do not even need to build a company to achieve your goals: you only need an organization that plans and organizes the agreed work, as in Open Source communities and Decentralized Autonomous Organizations (DAOs).

Hence, I encourage you to build A.I. readiness.

How to be A.I. ready?

Here are my recommendations to get started as an individual, especially if you are a leader in a company:

- “Socialize” with Generative AI applications useful to your job.

- Know your data and data systems to identify candidates for augmentation.

- Have “good” data. Good = true + meaningful + contextualized + accessible. As such, information must be stored in a secure and accessible location. Fortunately, Large Language Models handle unstructured data well.

- Have technologists that can pioneer lateral ideas. I recommend hands-on architects.

- Assess and promote simple ideas on a regular basis, and establish an AI-dedicated project portfolio pipeline.

- Select a set of competent AI tools and run them in a fully autonomous fashion.
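The “good data” definition (true + meaningful + contextualized + accessible) can double as a simple screening rule when you inventory your data to find candidates for augmentation. A minimal sketch, assuming each dataset in a hypothetical inventory has already been assessed by a human against the four criteria; the `Dataset` fields and sample names are illustrative only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Dataset:
    name: str
    verified: bool        # "true": the content has been checked for accuracy
    meaningful: bool      # relevant to an activity you want to augment
    contextualized: bool  # comes with context such as owner, date, and purpose
    accessible: bool      # stored in a secure location your AI tools can reach


def is_good(ds: Dataset) -> bool:
    # "Good" data must satisfy all four criteria at once.
    return ds.verified and ds.meaningful and ds.contextualized and ds.accessible


def augmentation_candidates(inventory: List[Dataset]) -> List[str]:
    # Keep only the datasets that pass the screen.
    return [ds.name for ds in inventory if is_good(ds)]


inventory = [
    Dataset("support tickets", True, True, True, True),
    Dataset("old sales spreadsheets", True, True, False, True),
    Dataset("contract archive", True, True, True, False),
]
print(augmentation_candidates(inventory))  # ['support tickets']
```

The screen is deliberately strict: a dataset that fails any single criterion, for instance one stored where your tools cannot reach it, is left out until it is fixed.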

You can find an extensive list of AI services at FutureTools.io and ThereIsAnAIForThat.com.

Less is not always more.

Less is more until you reach the “optimal zone”, an inflection point that represents the optimal balance between effort, cost, and result. Exponential growth occurs when, for minimal effort and expense, you achieve unprecedented results.

The critical factor is this natural law: everything is born from need, will be driven by purpose, feeds on energy, is protected by self-preservation, and evolves to maturity.

Thus, as long as three conditions hold, AI growth will not come at the expense of humanity: AI is never given all five of these elements at the same time, its digital self-preservation is never programmed to be mutually exclusive with the preservation of living beings, and its self-evolution stays within boundaries. Under these circumstances, humans can remain the dominant species.

As a consequence, one must consider what gives birth to a “trigger”: the initial impulse, born in the mind’s womb, that takes the form of an idea and turns into action through willpower. Until AI can produce its own triggers, it will not use another AI, automaton, or application because it needs to, but only because we have commanded or programmed it to.

Until then, we are safe.

We are… Fine… Aren’t we?

This is not the right question

The right question is: what is going to change for me?

Earlier I said, “It depends on your ability to adapt by learning a practice that is new for everyone”.

The long answer starts with a twist: the groups of humans building AI, and those using AI to augment and amplify their skills, will have an exponential upper hand because they can fulfill needs faster, more efficiently, and more accurately, at the cost of… just… time.

For example, building the next Instagram will depend on someone having:

- The willpower

- A distinctive, desirable idea

- A series of creative ideas

- The skills

- The drive to sell, communicate and promote their ideas to clients.

- The resilience to continue developing the ideas

We can conclude that what consistently makes the difference is the idea, the drive, the skills, how well the user experience answers the client’s needs, and the resources you can obtain to make things happen.

But if ideas are cheap and abundant, and if cognitive skills can be acquired through virtually free AI augmentation, then the remaining differentiators are the drive, the user experience, and the resources.

Thus, the Intellectual Property of a company becomes its Cognitive Know-how. Suddenly, the high-value assets are the doers who display high and consistent motivation; the leaders who not only keep the Pole Star lit but also keep their teammates inspired; the creative people; and the groups of people able to invest and evolve in the same direction under the same flag: their brand, which I consider to be the result of maintaining a homogeneous identity across the combined people and products.

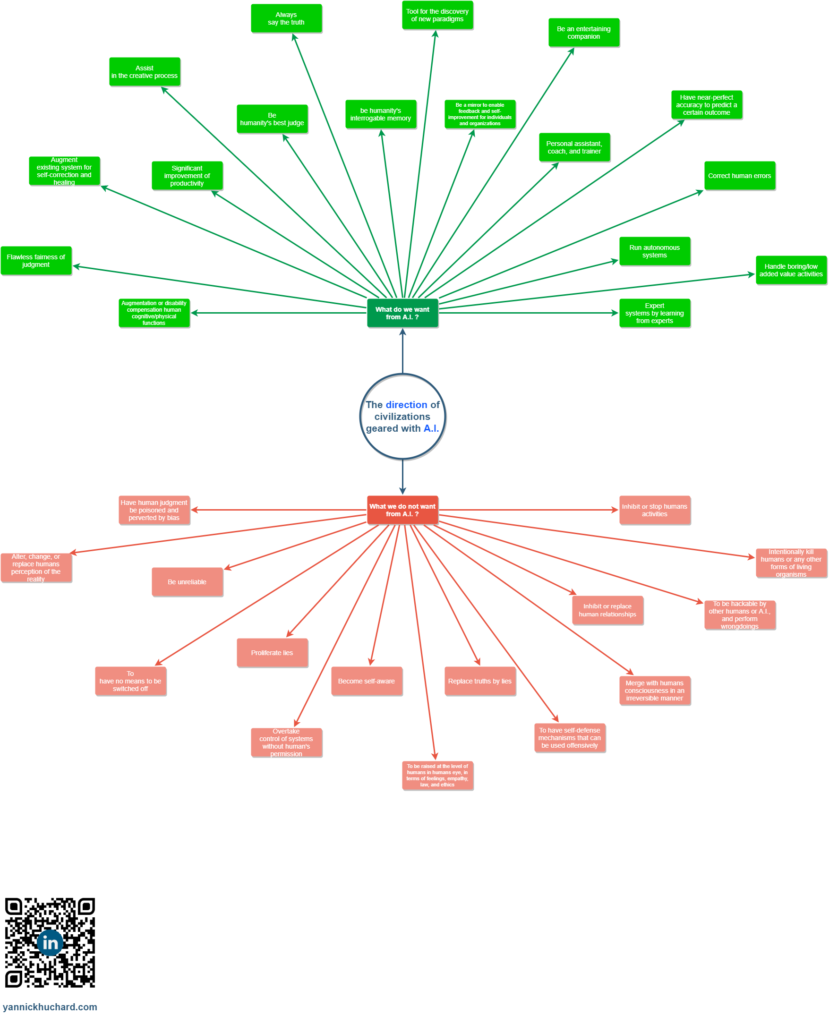

Holy Grail or Pandora’s Box?

The development of Generative AI has opened up a vast array of possibilities, but it also raises thousands of questions that need to be addressed.

For instance, one major question is how Generative AI will change our day-to-day interactions.

Furthermore, there is concern about whether this technology could lead to mass unemployment and economic inequality.

Another potential consequence is that it might devalue human creativity and originality.

Additionally, it is important to explore how Generative AI might impact human cognition and decision-making.

In terms of IT Engineering and Architecture, what is the impact of AI on these fields, and how will they adapt to this new technology?

Education is another area that could be significantly impacted, and it is worth considering how Generative AI might affect traditional learning methods.

Moreover, there is a concern that Generative AI could create a world in which we cannot distinguish between what is real and what is artificial. If this were to happen, what are the ethical implications?

Finally, the implications of Generative AI for democracy and governance are also important to consider, particularly with regard to its development and regulation.

Overall, Generative AI raises many questions that will require collective wisdom if we are to fully prepare for its impact on society.

I will attempt to answer these questions in upcoming articles of the “Navigating the Future with Generative AI” series.

Until then, if you remember only one thing from this article: play with Generative AI until it replaces just one activity in your daily routine, then boost your prompt engineering skills by spreading the word and educating your relatives.

🫡