What you are about to read might be the most unsettling—and necessary—thing you read about your career this year. It cuts against the grain of simplified narratives and offers a dose of reality about the monumental economic transformation we are entering. This 6th episode (of the “Navigating the Future with AI” series) is not just another article about AI. It is your personal GPS for navigating the Great Rearchitecture. Within it is a detailed plan designed to demystify what is truly happening, helping you to navigate the coming challenges while seizing the profound opportunities they create. It is your blueprint for moving from a position of uncertainty to one of relevance and power in the post-AI economy.

Business & Tech leaders, economists, and thinkers are all forecasting a worldwide shift, and the ground is already trembling. The common fear is one of simple replacement—that millions of workers will be made redundant by a new wave of artificial intelligence. While this fear is understandable, it misinterprets the present danger. The story is far more complex and has already begun.

The Great Reallocation of Capital: Understanding the Self-Fulfilling Prophecy

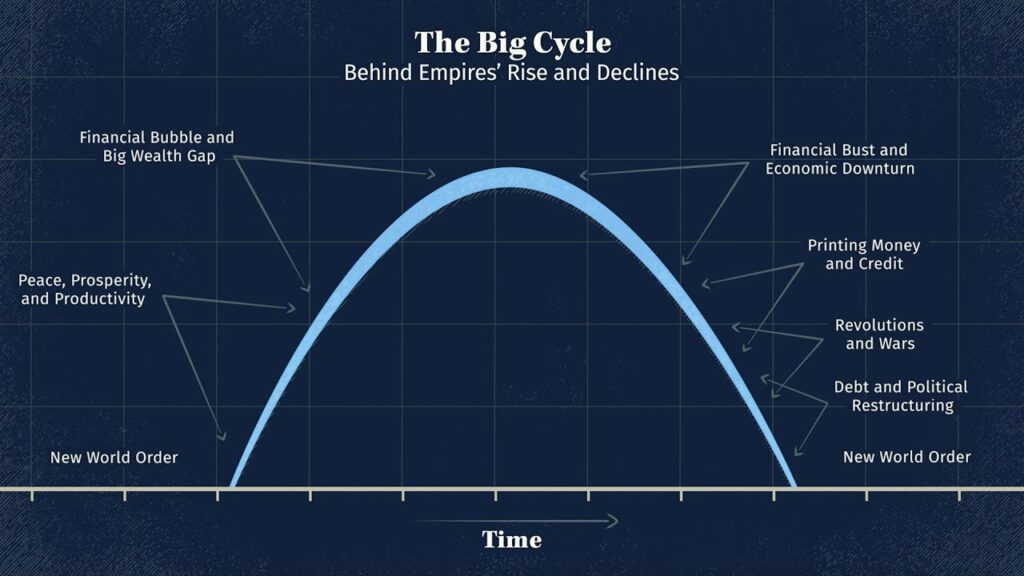

The Great Rearchitecture that is reshaping our professional world isn’t happening in a vacuum. It is being driven by a powerful, underlying financial current: The Great Reallocation of Capital.

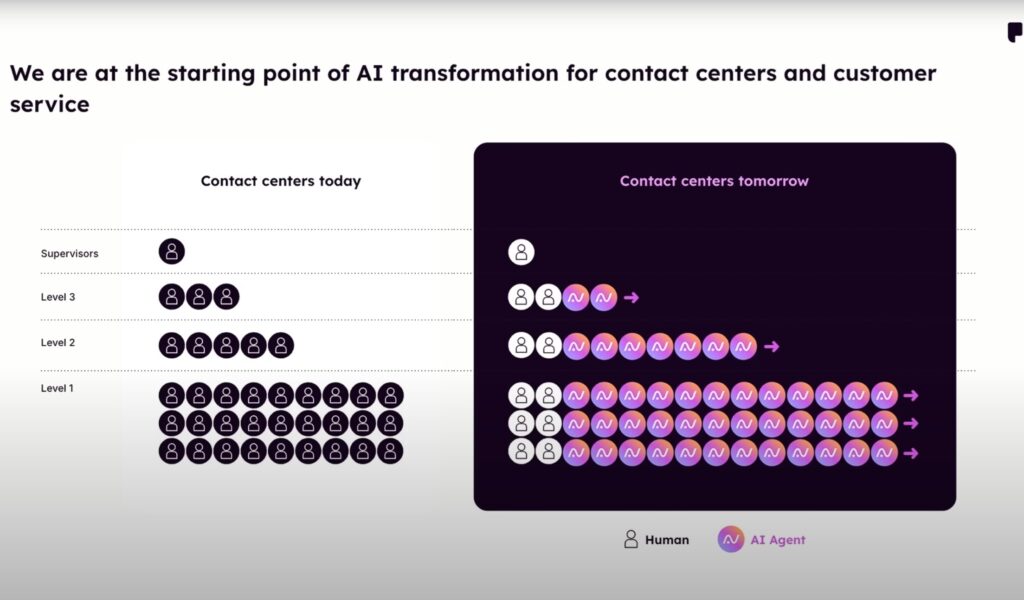

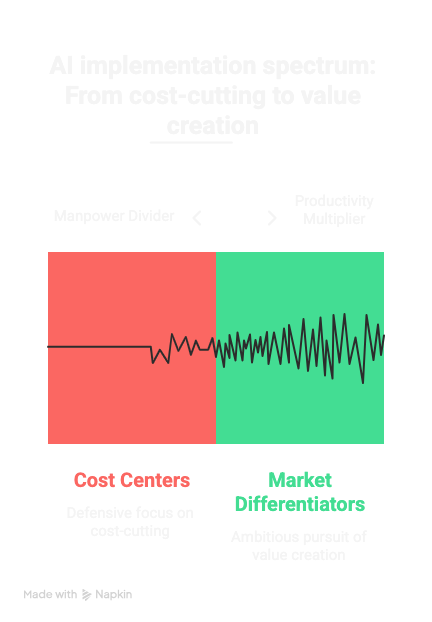

At its core, this reallocation stems from a fundamental choice I outlined in the first article of this “Navigating the Future” series (Digital Augmentation). Does a leader use AI as a manpower divider—achieving the same output with fewer people—or as a productivity multiplier, using the same workforce to accomplish vastly more? The layoffs we are witnessing suggest many are choosing the former.
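A minimal worked formalization of this choice follows. It assumes, as a simplification the argument itself does not make, that output scales linearly with headcount. Let N be headcount, p the output per person, and k > 1 the productivity gain AI provides per person. These symbols are introduced here only for illustration.

$$
\begin{aligned}
O &= N\,p \\
\text{Manpower divider (same output): } O' = O &\;\Rightarrow\; N' = \frac{O}{k\,p} = \frac{N}{k} \\
\text{Productivity multiplier (same team): } N' = N &\;\Rightarrow\; O' = N\,(k\,p) = k\,O
\end{aligned}
$$

With k = 2, for example, a team of 10 either shrinks to 5 people delivering the same output, or stays at 10 people and delivers twice as much.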

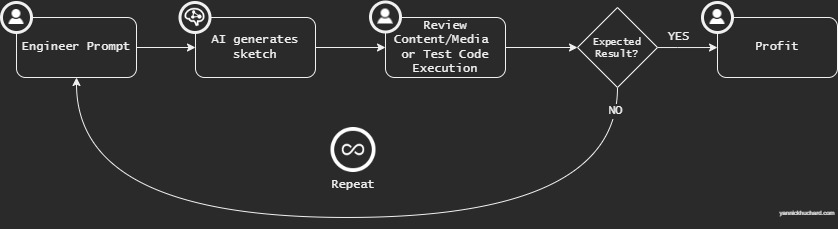

It’s a strategic crossroads, and leaders are diverging. A few forward-thinking leaders are charting the alternative path. A prime example is Shopify CEO Tobias Lütke, who, in a widely circulated memo, told his company to rein in hiring and embrace a new default: before asking for more headcount, teams must first demonstrate why AI cannot get the job done. This is the productivity multiplier in action: employees transforming their own jobs to expand their capabilities and, by extension, the company’s.

And yet, this choice often ignores a fundamental truth I have observed in every organization I have worked with: there are no empty backlogs. There is always 10x more work to be done than the current team can handle, with ambitions that would require 100x the effort. A substantial reservoir of potential value lies untapped.

Just consider the functions often treated as cost centers—quality assurance, cybersecurity, compliance, and even employee wellness. With AI as a multiplier, these can be transformed into powerful market differentiators. A company’s decision here reveals its true vision: a defensive focus on short-term cost-cutting versus an ambitious pursuit of long-term value creation.

You have seen the headlines. Microsoft, IBM, Amazon, Salesforce, and Meta have all made significant cuts to their workforce. But the reduction is not, as many assume, primarily because AI is already capable of replacing workers like engineers, designers, marketers, HR, compliance specialists, and, proportionally, managers. The reality is that these layoffs are made in anticipation of AI’s future power.

We are witnessing a strategic, system-wide efficiency exercise. Corporations are trimming their largest operational expenditure—salaries and their associated costs—to amass immense war chests of capital. This capital is being funneled directly into the single biggest prize in modern history: the development and deployment of Artificial General Intelligence (AGI) and, eventually, Superintelligence. It is a frantic race, and whoever gets there first will win the game.

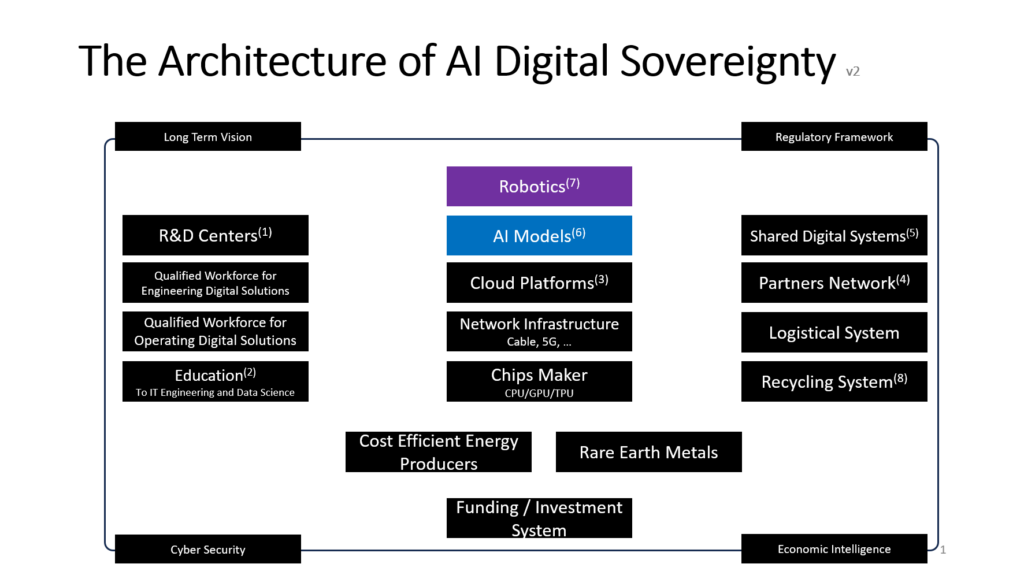

The contenders are clear: Google, leveraging decades of research from DeepMind and its powerful Gemini models; Meta, pushing the open-source frontier with Llama 4 and its JEPA world models; Elon Musk’s xAI and its unfiltered Grok; Anthropic’s safety-conscious Claude; and the colossal cloud platforms of Amazon and Microsoft. Underpinning this entire revolution is NVIDIA, the undisputed kingmaker whose GPUs provide the very infrastructure of training and inference.

This is not, however, merely a Silicon Valley affair; it is a key battlefield in the techno-geopolitical balance of power. China is rapidly closing the gap with formidable open-source contenders like DeepSeek’s V3 model and R1 reasoning model, Alibaba’s versatile Qwen family, and the surprise emergence of Moonshot AI’s Kimi K2, an exceptionally powerful agentic model. Meanwhile, Europe is striving for technological sovereignty with champions like France’s Mistral AI, which has gained significant traction by offering a powerful, open-weight alternative, and Germany’s Aleph Alpha.

This fierce global cycle of investment and innovation leads to an unavoidable truth: intelligence itself is becoming a manufactured resource, destined to become hyper-reliable at executing complex tasks. And the disruption is not limited to knowledge work; Amazon’s deep investment in robotics signals a parallel transformation for physical labor.

This high-speed revolution, however, is largely a Big Tech phenomenon. The other 99% of the economy is not there yet, and for most companies the reality is far more challenging. This isn’t theoretical. In my own journey leading AI adoption in the banking sector, an industry I know very well, I witnessed the immense difficulty firsthand. It took a full year of relentless effort: laying the foundations of our AI-driven transformation from the Technology Office by aligning our most powerful change engines (Enterprise Architecture, Engineering, and Innovation); using the momentum from public AI discussions to help secure buy-in; engaging with the local tech ecosystem; and rallying a great team of curious, knowledgeable, and innovative people to push in the same direction and prove the value.

And what I consistently see, whether in discussions with global consulting firms, specialized service providers, or businesses large and small, is a recurring, critical gap. This isn’t just my observation; it’s a reality confirmed by a major Microsoft and LinkedIn study, which found that while a commanding 79% of leaders feel AI adoption is critical to remaining competitive, a staggering 60% of them state that their company lacks a clear vision and plan to implement it. This disconnect highlights that most organizations simply lack the strong technological leadership and prepared workforce to manage such a transformation.

This gap is creating a powerful self-fulfilling prophecy. The belief in AI’s future profitability is compelling companies to lay off staff now to fund AI investment, which in turn accelerates the creation of the very technology that will make those roles redundant later. The engine of this prophecy is the eternal drive for shareholder value. And make no mistake: if you invest in the stock market, you are partly driving that engine.

Be Aware of and Leverage the Delta (Δ)

Do you feel it? That persistent sense, ever since you were a teenager, that whatever the direction, life and society were always demanding more?

- More study to get a better job.

- More work to get a better salary.

- More exercise on a regular basis just to stay in shape.

- More training during your job to remain compliant and try to stay ahead.

Not only that, have you noticed that whatever you do, a relentless system keeps pushing the rate of change itself? Inflation drives prices up, requiring higher salaries or forcing you to lower your living standards. Housing prices keep climbing, so buying a home keeps getting harder, and you keep hoping a better opportunity will come later, which it never does, because when prices are low, mortgage rates are high. Your job constantly requires new skills because some technology or method is no longer efficient enough, or no longer trendy, like the shift from Waterfall to Agile that suddenly made a PRINCE2 certification seem obsolete. And just when you felt you had barely understood crypto and blockchain, why is everything about AI now?

This, ladies and gentlemen, is what I call the Delta (Δ), inspired by the mathematical symbol for change.

The Delta is always on. It can never be turned off. It is not a bug; it is a feature of our modern world, hardwired into the very dynamics of market economies and the core of human psychology. We all want a better life, a higher standard of living, and we operate in a competitive environment of businesses whose primary reason for existing is to grow. Therefore, you have a choice. You can resist the Delta and be broken by it, or you can accept it. Embrace it. Change your perspective on it, and learn to ride the wave. You must ride it until we, as a global society, reach a point, whether through deliberate agreement or through catastrophe, where we decide that the Delta can push the human psyche, and nations as a whole, only so far.

Your Blueprint for Lasting Value in the New Economy

Many leaders look at this disruption and immediately jump to solutions like Universal Basic Income (UBI). Let me be unequivocally clear on where I stand: while I hold that unconditional support for those left without work is a fundamental pillar of a humane society, my critique of UBI is that it acts as a patch on a structural fracture. It addresses the symptom—a lack of income—while ignoring the deeper, coming crisis of agency and purpose. Furthermore, it completely sidesteps the great economic equation of our time: the widening disconnect between the effort a task requires, the value that work creates, and the way it is ultimately remunerated. It fosters dependency when the strategic imperative must be to cultivate autonomy.

The true path forward is not merely to distribute the spoils of this technological revolution, but to democratize the very means of its creation. The superior strategy is empowerment through universal access to the foundational tools of the new economy. This means powerful open-source AI and cheap, abundant computing, delivered as a utility service as fundamental and reliable as electricity or the telephone network. This is the architecture for genuine self-sovereignty, the preservation of dignity, and the creation of true equality of opportunity. After all, this new form of intelligence was trained on the collective data of humanity. Why, then, shouldn’t the tool itself be given back to us all?

That is the ideal, but you operate in the now. The Great Reallocation is already reshaping your reality, so while we strive for that future, you must secure your place in the present. This starts with an *upgrade* in how you view yourself.

Your survival and success hinge on a single, powerful concept: you must productize your craft and your uniqueness. This is no longer just advice for freelancers or entrepreneurs; it is the new imperative for anyone who is employed and wants to remain so.

In my work building and running businesses, I have come to a critical realization: the framework for launching a successful venture, which I codified in the AMASE Startups method, is no longer just for startups. It has become the operating manual for the individual. The battlefield has changed, and the strategies that build resilient companies are now the very same strategies that must build a resilient career.

Consider how each dimension now applies directly to you:

- Your Personal Operating System (The Business Dimension): This is your strategic self. How do you operate? What is your unique value proposition, your personal business model that you bring into the larger organization? This is your architecture for creating value.

- Your Craft as a Product (The Product Dimension): This is where you manage your unique expertise with the discipline of a product manager. It is the sum of your evolving competencies, your mastery of technology, and the tangible quality of your work. In this new market, your craft is the product on offer, and you must be relentless in its upgrades and iterations.

- Your Cultural Signature (The Culture Dimension): This is the unique environment you initiate through your perspective, personality, speech, and actions. It is the set of principles that governs your work and interactions, creating a powerful and singular element of your moat that attracts those who resonate with your way of being.

- Your Signal in the Noise (The Visibility Dimension): This is your personal brand, your discoverability. In a world saturated with information, how do you broadcast your value? It is your network, your reputation, your documented successes—your ability to be found by those who need your unique solution.

- Your Economic Sovereignty (The Finance Dimension): This is your financial autonomy. It is your understanding of the economic value you generate, your skill in negotiating your worth, and your strategy for building financial independence beyond a simple paycheck.

Let this paradigm shift settle in, for it is the new law of professional gravity. The rule is simple: You are not an employee. You are a sovereign enterprise.

The Urgency of This New Reality

Why does embracing this shift feel so urgent? Because it presents you with a stark choice, a decisive fork in your professional destiny.

On one path, you become the architect of your own value, running your career with the discipline and foresight of a competitive business. You understand that you are competing not only with other people, but with a holistically transformative technology that is redefining the very rules of the game.

The other path is one of passive resistance and inaction. It is the path where you undergo the pressure of assimilation. On this path, your complex cognitive skills are not just devalued; they are disaggregated—broken down into autonomous, independent units of work ready to be executed by artificial intelligence. Your holistic expertise is commoditized into a collection of tasks, becoming the new blue-collar labor of the information age. On this path, you become a cog in a system, pressured by other humans who are themselves obsessed with cost efficiency and keeping the OPEX down. In their world, you cease to be a strategic asset and become an adjustable variable in an Excel formula.

This is not a distant threat. It is the acceleration of an existing dehumanization. While this mindset only represents a fraction of corporate culture, it is a powerful and growing one. And for the first time, this new paradigm gives you the power to consciously outmaneuver it.

Your Immediate Action Plan: The Four Pillars & Four Habits

To become the architect of your own value, you must build your enterprise of one on four foundational pillars, reinforcing them with four non-negotiable habits.

Pillar 1: Evolve into the T-Shaped Orchestrator

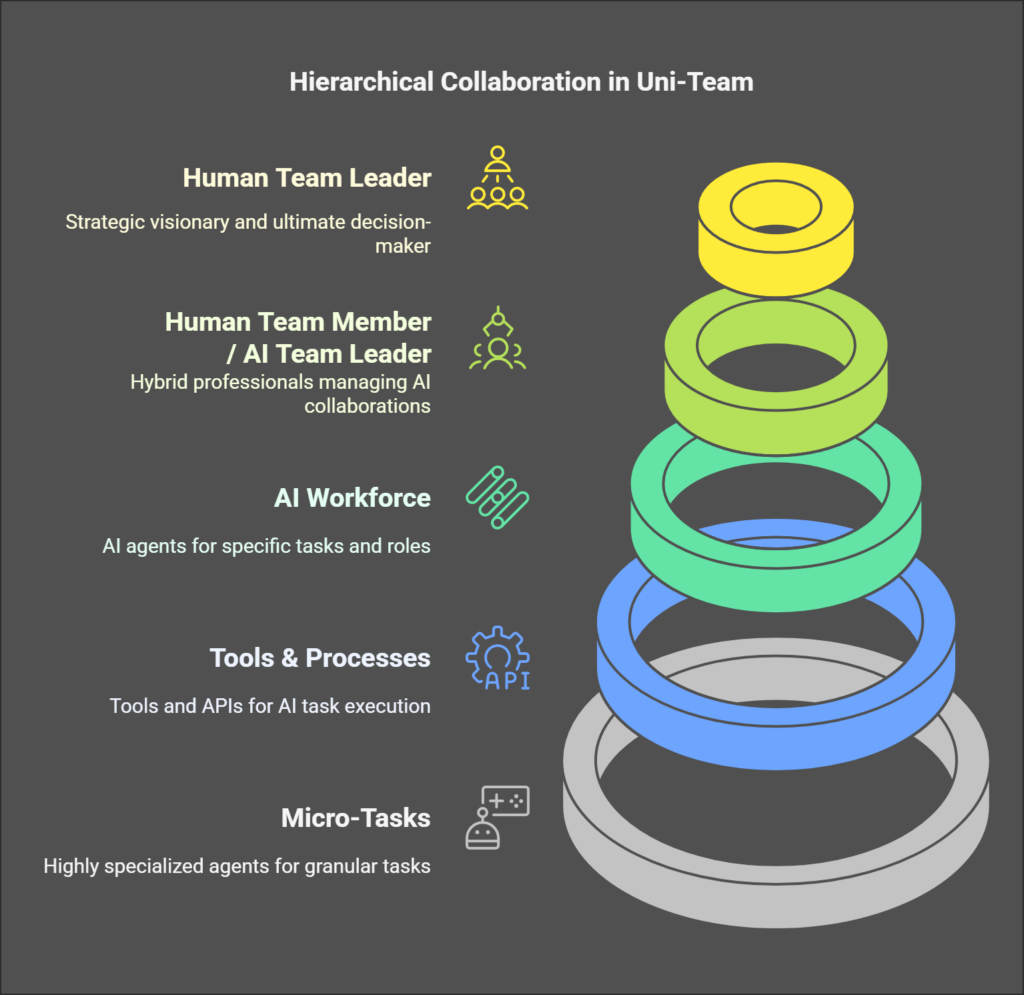

The future does not belong to the shallow generalist—the “jack of all trades, master of none.” That model is obsolete. The new baseline for relevance is the T-shaped professional. This is an individual who grounds their broad, cross-functional knowledge (the horizontal bar of the T) in at least one pillar of deep, specialized expertise (the vertical stem of the T).

This distinction is important. As AI rapidly commoditizes generic, student-level knowledge, it effectively levels the playing field for anyone without a defensible specialization. Your deep expertise is the anchor that gives you the gravity and perspective to manage the broader landscape. It is the backbone that allows you to become an effective Orchestrator.

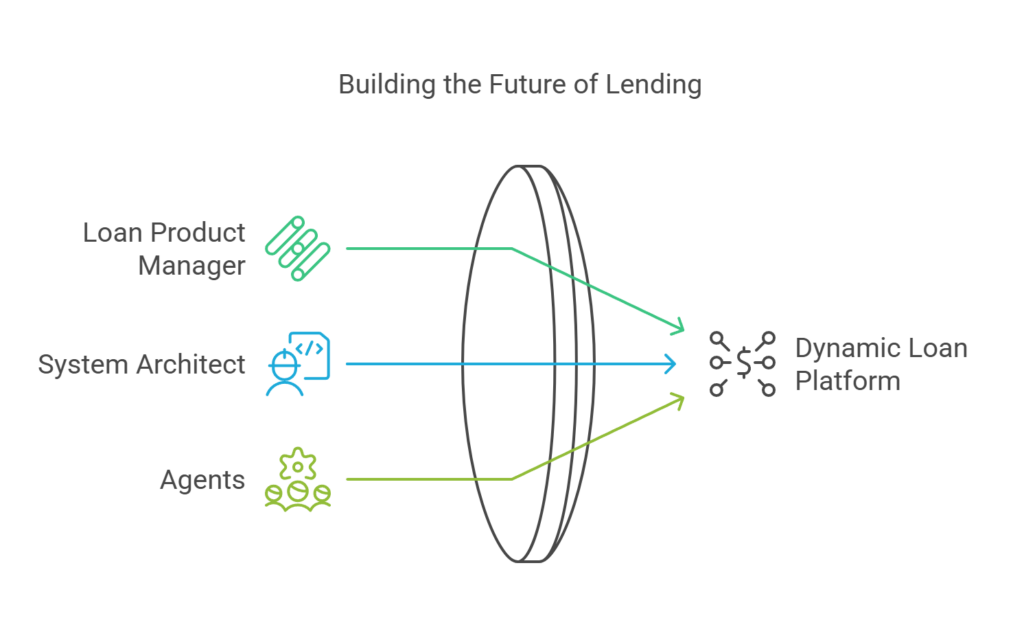

Your value will no longer be defined by a single, siloed skill, but by your capacity to manage a portfolio of outcomes by conducting a symphony of specialized intelligences. You will lead hybrid teams where highly specialized human experts work in concert with a new class of digital colleague: the hyper-efficient AI Agent. The power lies not in doing, but in orchestrating from a position of deep knowledge.

Imagine you are leading a project to launch a new IT application. Your role is that of the central conductor. You will:

- Deploy a marketing agent to run a dynamic and targeted social media campaign.

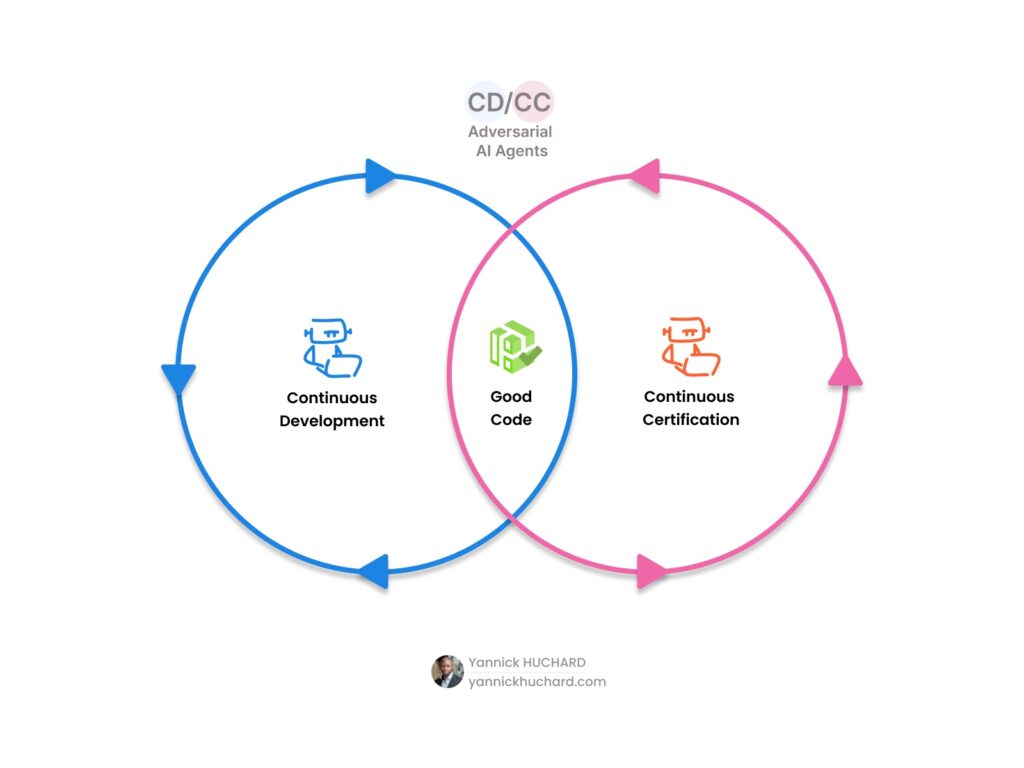

- Task an adversarial AI to act as your “red team,” relentlessly probing your application for security vulnerabilities.

- Direct another agent to instantly construct a perfectly formatted product sheet from complex technical specifications.

- And assign yet another to build and manage a customer survey and feedback system.

This role requires more than just project management; it demands a holistic understanding of the entire value chain—from customer journey to final delivery.
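The orchestration pattern described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a real agent framework: the four agent functions are placeholders that simply return strings, and names such as `orchestrate` and `launch_plan` are invented here. What it shows is the shape of the role: the human writes the briefs, delegates independent tasks concurrently, and collects every deliverable for review.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable

# Hypothetical agent signature: takes a brief, returns a deliverable (a string here).
AgentFn = Callable[[str], Awaitable[str]]


@dataclass
class Task:
    name: str       # label for the deliverable
    agent: AgentFn  # which (hypothetical) agent handles it
    brief: str      # instructions written by the human orchestrator


# Placeholder agents: a real system would call AI services here.
async def marketing_agent(brief: str) -> str:
    return f"campaign plan for: {brief}"


async def red_team_agent(brief: str) -> str:
    return f"security findings for: {brief}"


async def docs_agent(brief: str) -> str:
    return f"product sheet from: {brief}"


async def feedback_agent(brief: str) -> str:
    return f"survey design for: {brief}"


async def orchestrate(tasks: list[Task]) -> dict[str, str]:
    """Run all delegated tasks concurrently and collect results for human review."""
    results = await asyncio.gather(*(t.agent(t.brief) for t in tasks))
    return {t.name: r for t, r in zip(tasks, results)}


def launch_plan(app: str) -> dict[str, str]:
    """Build the four briefs for an application launch and dispatch them."""
    tasks = [
        Task("campaign", marketing_agent, f"{app} launch on social media"),
        Task("red_team", red_team_agent, f"{app} staging build"),
        Task("product_sheet", docs_agent, f"{app} technical specs"),
        Task("feedback", feedback_agent, f"{app} early users"),
    ]
    return asyncio.run(orchestrate(tasks))


if __name__ == "__main__":
    for name, deliverable in launch_plan("MyApp").items():
        print(f"{name}: {deliverable}")
```

The tasks run concurrently because they are independent of each other; deciding what to do with the results, and how they fit the overall value chain, stays with the orchestrator.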

The Elite Advantage: Evolving to the PI-Shaped (Π)

For those who wish not just to thrive but to gain a truly dominant position in the post-AI economy, achieving a T-shape is the most decisive milestone. Yet, there is a higher level of evolution that confers an almost insurmountable advantage: becoming a Π-shaped (Pi-shaped) professional.

As I’ve detailed in my work on identifying rare talent, a Π-shaped professional builds on two deep pillars of specialization: for instance, one in a business domain like finance and another in a technology domain like data science. What gives this structure its immense power is the arch connecting these pillars: mastery of an interdisciplinary practice, such as Enterprise Architecture or Project Management, which enables them to synthesize disparate fields into a single, coherent vision.

These individuals have a natural head start in the new economy. They are already wired to be the nexus, the strategic hub that can translate deep business needs into complex technological solutions, making them the ultimate Orchestrators. This is the aspirational path for those determined to lead.

Pillar 2: Build Your Moat on Experience, EQ & Artistry

As AI commoditizes IQ-based tasks, your human essence becomes your greatest differentiator.

- The Emotional Quotient (EQ) Moat: This is your ability to collaborate, inspire, and add to a team’s cohesion. Destructive, selfish behaviors will become terminal liabilities.

- The Artistic Factor: Your unique creative voice—your aesthetic sense, your storytelling, your capacity for original expression—is a beacon of distinction in a world of uniformity.

- Your Personal Intellectual Property (IP): This is your most critical asset. It is the sum of your unique methods, success recipes, custom templates, and strategic frameworks forged from your direct experience and “battle scars.”

These elements combine to create your ultimate moat: The Experience.

A few years ago, Wouter Blokdijk, an eminent Architect who used to lead the Architecture Studio and ACOM—an event for and by the vibrant architect and engineer communities at ING—gave a memorable presentation about the power of “Stages.” It stuck with me. The power wasn’t only about the immense effort and the meaning of giving others a platform to express themselves, tell their story, and share knowledge. It wasn’t just about creating a platform that could be standardized. It was about the power to make experiences possible—experiences that touch both the rational and the emotional sides of our brain. This made me realize that Experience is the ultimate moat in the age of AI.

The Experience is what sets you apart from every other player on the market. We all know we need a smartphone to manage our lives, so why do we get so emotionally invested arguing over a Samsung, an iPhone, a Google Pixel, or a Huawei? You’ve guessed it: the experience. Each one offers a different feeling, a different dialog with the company and its community. The brand, this collective identity, this palette of sentiment, feels different. And that difference matters. Product design that goes beyond function, wrapped in an experience, matters. The story, and how you tell it, matters.

Another dimension of this moat, which is profoundly human, lies in the realm of sensory value.

Think about that feeling when you enter a French bakery. You are welcomed warmly by the “boulangère,” and immediately enveloped by a symphony of smells—the crisp baguette, the buttery “pain au chocolat,” the sweet “tarte aux pommes.” You chit-chat for a moment while ordering a sandwich made with fresh vegetables and bread straight from the oven, perhaps with a dollop of handmade mayonnaise, and you add a bag of light, sugary “chouquettes” for dessert. You say goodbye, and the whole encounter leaves you with a deep feeling of satisfaction, already anticipating your next visit.

This experience is unique, irreplaceable, and memorable. For the boulangère, the bakery is her “Stage.”

Her expertise lies in taste and scent, but the principles are universal—the touch and feel of a bespoke garment, the carefully curated ambiance of a store, the soul of high-end gastronomy. These are innovations that make sense primarily from human to human. Of course, AI can assist in the research and production of these things, but it cannot replace the human perspective required to truly understand them. Because ultimately, to empathize, communicate, sell, and bring value in the sensory world, you need the one thing an AI will never possess: a human body and the lived experience that comes with it.

Pillar 3: Embrace Entrepreneurship

The traditional career ladder (including the middle management layer) is being challenged. The future belongs to the entrepreneur, and this identity now takes many forms.

- It can be the ‘solopreneur,’ a sovereign agent leveraging their unique expertise in the open market.

- It can be the ‘founder,’ who rallies a team to build a new company from the ground up.

- And critically, it can be the ‘intrapreneur’—the employee who acts as an agent of change, architecting new ventures and driving innovation from within the walls of their existing organization.

Whichever path you choose, the underlying mindset is the same: it is about proactively creating and capturing value, not just fulfilling a pre-defined role. It is about building constructive solutions that push your nation, society, and humanity forward.

While this path has traditionally involved navigating complex administration, the very forces driving this new economy are lowering the barriers to entry. The proof lies in the massive capital flowing not just to the tech titans, but to a new generation of agile, visionary startups. In Europe, for instance, France’s Mistral AI has mounted a formidable challenge to the US giants, raising over €600 million by providing powerful open-weight AI models and proving that strategic innovation can attract world-class investment. Meanwhile, UK-based Wayve is revolutionizing transportation, securing over $1 billion in a landmark funding round to build ‘embodied AI’ for truly autonomous vehicles that can learn and adapt to any environment.

This lowering of barriers isn’t just financial; it’s profoundly technological. The advent of Generative AI and Augmented Coding (also known as Vibe Coding) is ushering in a no-code revolution. Building websites, applications, and other kinds of software is no longer the exclusive domain of specialist coders. Instead, you can architect solutions using natural language prompts in your own language. Pioneering platforms like Replit, Bolt.new, and Firebase Studio are taking this even further, abstracting away the complexities of the backend by managing your infrastructure for you.

For applications of moderate complexity, the traditional barriers are evaporating. Your imagination, your focus, and your available time are now the primary constraints on what you can create.

Pillar 4: Be a Discoverer

Research is hot, trending, and now acknowledged as a major instrument of geopolitical soft power.

The new global currency is not just capital; it is research talent, with nations actively competing to attract and retain the world’s sharpest minds. Look no further than the race for doctorates, where China now graduates more STEM PhDs annually than the United States, creating a seismic shift in the global talent landscape. This arms race for talent is mirrored in the explosive output of their work.

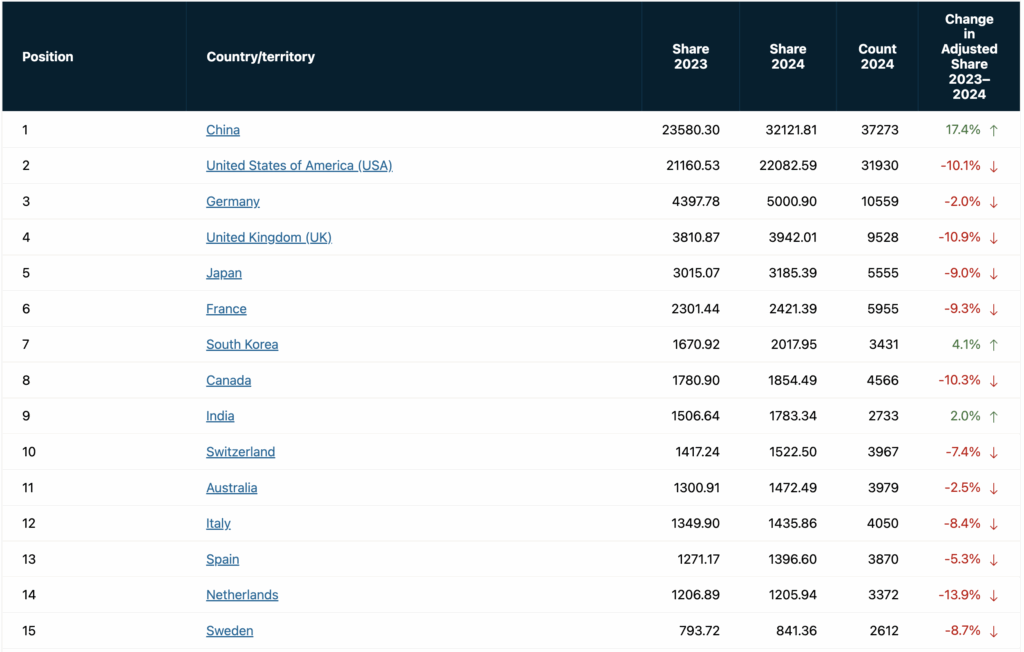

This trend is not a matter of debate; it is a statistical reality, quantified with stunning clarity by the Nature Index 2025. The report confirms that China now decisively leads the world in high-quality research output, ahead of the US, Germany, the UK, and Japan. But the real story is in the momentum: China’s contribution surged by an incredible +17.4% in a single year (from 2023 to 2024). To put its lead into perspective, China’s output of high-quality publications is now over 5,343 points higher than the second-place United States and more than 26,714 points ahead of third-place Germany.

The Stanford Institute for Human-Centered AI’s 2025 report points in the same direction, showing that the number of AI publications has more than doubled since 2010, a sign of the relentless acceleration of discovery.

This academic explosion has a practical, even more chaotic, counterpart. Consider the number of AI models published on Hugging Face, the de facto “super-marketplace” for the global AI community. The platform’s model count has skyrocketed, with nearly one million new models added in just nine months, reaching 1,898,890 in July 2025. It is a cognition explosion, happening in real time.

This macro-trend finds its corporate manifestation in a “war for brains” raging between Google, Meta, OpenAI, and Microsoft. The simple act of recruitment has evolved into a high-stakes talent transfer market akin to that for football and NBA stars, with compensation packages reaching into the hundreds of millions. The deals for elite AI researchers now sit in the same stratosphere as Kylian Mbappé’s estimated €320 million with Real Madrid across five seasons or Jaylen Brown’s landmark five-year, $304 million contract with the Boston Celtics.

Look no further than Microsoft’s 2024 deal to hire Mustafa Suleyman and the majority of his Inflection AI team, an unconventional “acqui-hire” valued at over $1 billion when accounting for licensing and other fees. This move was mirrored in mid-2025, when Meta poached Alexandr Wang from Scale AI as if capturing a Mythical Pokémon (exceptionally rare, strategically crucial, and emblematic of a deeper ambition) to lead its newly formed ‘Superintelligence’ team, as part of a broader strategic deal that included a $14.3 billion investment for a 49% stake in Scale AI. In both instances, these were not simple talent acquisitions; they were strategic investments in the very capacity for future breakthroughs, on what these companies see as the “road to Artificial SuperIntelligence (ASI)”.

This dynamic extends far beyond AI. The same holds in healthcare, where bio-engineers and genomics researchers are developing tools to revolutionize health, in defense, and even in foundational science with the race for quantum computing. The competition for highly qualified minds, people able to work in cutting-edge research teams, is the real invisible war. The goal of these teams is to produce the papers, the patents, and the commercial intellectual property that create a true, unassailable competitive advantage: a quantum leap of insight that remains, for now, far beyond the creative potency of any AI. To position yourself here, among the discoverers, is to place yourself at the highest and most secure echelon of the new economy.

Yet, even this moat is not eternal. We must acknowledge the stated ambitions of leaders like OpenAI’s Sam Altman, who openly seek to build AI models capable of making novel scientific discoveries themselves. We are not there yet, but it is a frontier to be watched with active vigilance.

The Four Foundational Habits

Acting on this framework requires discipline. These four habits are not just suggestions; they are the new requirements for professional survival and relevance.

But before we detail them, let’s observe how the future of work is already unfolding through clear, undeniable trends:

- The Normalization of Personal AI: Personal AI assistants are rapidly becoming the norm in our lives. For our ten-year-old children, growing up with an AI will be as natural as it was for millennials to grow up with a smartphone.

- The Incremental UI Absorption: Specialized application interfaces will gradually be absorbed by these personal AI assistants. Through API integration and emerging protocols such as the Model Context Protocol (MCP) for context-sharing and Agent2Agent (A2A) for agent-to-agent communication, these assistants will be able to reason across multiple applications and data sources, becoming a single, conversational front-end for our digital lives.

- The Persistence of Unreliability: Despite advances like web search grounding, thinking models, and Retrieval-Augmented Generation (RAG), Large Language Models (LLMs) still hallucinate. We must remember that their output is a synthesis of other humans’ content, which is not the same as verified fact.

- The Law of Exponential Progress: The technology is only getting better, faster, and more potent. The performance gap between 2020’s GPT-3 and today’s state-of-the-art models is not just an iteration; it’s a light-year leap in capability.
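A toy sketch of the “single, conversational front-end” idea from the trends above, assuming each application is wrapped behind a plain function. The adapters, the keyword registry, and `assistant` are all hypothetical; this is not an implementation of MCP or A2A, and the keyword match merely stands in for the reasoning a real model would do when choosing which applications to call.

```python
from typing import Callable


# Hypothetical app adapters: each hides one application's API behind a function.
def calendar_app(request: str) -> str:
    return "calendar: next free slot is Tuesday 10:00"


def email_app(request: str) -> str:
    return "email: 3 unread messages"


def expenses_app(request: str) -> str:
    return "expenses: report submitted"


# Registry mapping trigger keywords to adapters. A real assistant would let the
# model pick tools from their descriptions; keyword matching is a stand-in.
TOOLS: dict[str, Callable[[str], str]] = {
    "meeting": calendar_app,
    "inbox": email_app,
    "expense": expenses_app,
}


def assistant(request: str) -> list[str]:
    """Route one conversational request to every matching app, in registry order."""
    text = request.lower()
    answers = [tool(request) for keyword, tool in TOOLS.items() if keyword in text]
    return answers or ["no connected app can handle that yet"]


if __name__ == "__main__":
    print(assistant("Check my inbox and find a meeting slot"))
```

One request fans out to two applications and comes back as one answer, which is the sense in which the individual interfaces get “absorbed”.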

Considering this new reality, I invite you to strengthen your sovereign agency with these four foundational practices:

- Sovereign Critical Thinking: This is the essential safeguard. You must cultivate a healthy skepticism towards AI-generated content and, more importantly, towards the claims of people and enterprises leveraging AI at scale, especially those operating in the “High-Risk AI” category defined by frameworks like the EU AI Act. This is about preventing lazy reasoning and refusing to outsource your judgment. The “how” of a process is often easier to challenge than the “what” of a stated fact, yet to build a true capability, you need to master both. Honing your critical thinking makes you a more discerning user of AI, which in turn accelerates your own learning and gives you an edge over those who accept its output uncritically.

- Continuous Learning: This is paramount because the Delta never stops. You must leverage modern tools to your advantage, using AI itself as an engine for comprehension. Dive into platforms like ChatGPT and the information streams on X to accelerate your learning and keep pace with a world that refuses to stand still. This is your first line of defense against obsolescence.

- Continuous Practice: This is where theory is forged into capability. It is not enough to think you know how something is done; you must know how to do it through direct, relentless application. Practice is how you accumulate the concrete examples, the case studies, and the definitive experience that form the bedrock of your personal IP. It is through doing that you gain the tangible proof of your value.

- Engineered Serendipity: In a world overflowing with noise, you cannot simply wait to be found; you must engineer the conditions for opportunity to come to you. This isn’t about shouting louder than everyone else; that is a defective and inefficient strategy. True serendipity is engineered by building a believable value proposition rooted in the tangible assets you created through practice. It is the deliberate combination of your sovereign thinking, your continuous learning, and your proven experience that creates a gravitational pull for the most meaningful opportunities, allowing you to be “picked” when it matters most.

In a world that seeks to commoditize your talent into a line item in an Excel formula, becoming the architect of your own enterprise is the ultimate expression of sovereign agency and the only way to truly ride the Delta.

But the Delta, as powerful as it is, is not the ultimate source. It is probably the most visible expression of a deeper, more fundamental law of our hyper-connected world: The Law of the Equilibrium Imperative. In a future article, we will dive into this foundational principle and its one immutable rule: a system will always find a new equilibrium, and you can either be a willing architect of it or a casualty of the adjustment.